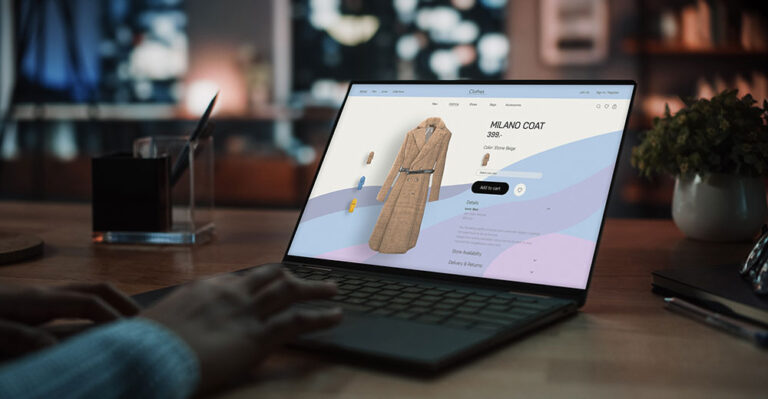

How to choose a theme for your WooCommerce store

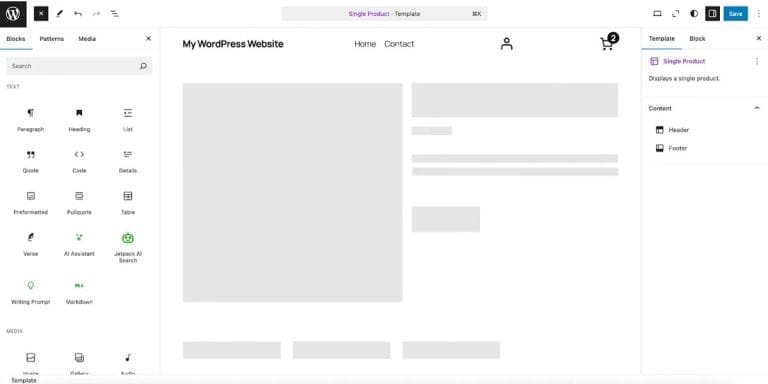

When starting a WooCommerce store, one of your first steps is choosing a theme. Not only is a theme required to begin the site creation process, it also impacts everything about your website’s design and functionality